Greetings, peoples. As you probably know, these words are served to you by Tekuti, a blog engine written in Scheme that uses Git as its database.

Part of the reason I wrote this blog software was that from the time when I was using WordPress, I actually appreciated the comments that I would get. Sometimes nice folks visit this blog and comment with information that I find really interesting, and I thought it would be a shame if I had to disable those entirely.

But allowing users to add things to your site is tricky. There are all kinds of potential security vulnerabilities. I thought about the ones that were important to me, back in 2008 when I wrote Tekuti, and I thought I did a pretty OK job on preventing XSS and designing out code execution possibilities. When it came to bogus comments though, things worked well enough for the time. Tekuti uses Git as a log-structured database, and so to delete a comment, you just revert the change that added the comment. I added a little security question ("what's your favorite number?"; any number worked) to prevent WordPress spammers from hitting me, and I was good to go.

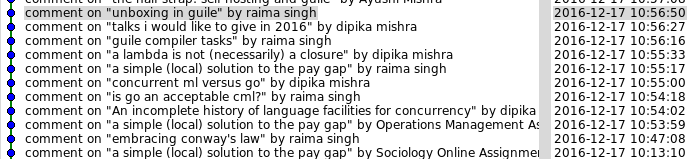

Sadly, what was good enough in 2008 isn't good enough in 2017. In 2017 alone, some 2000 bogus comments made it through. So I took comments offline and painstakingly went through and separated the wheat from the chaff while pondering what to do next.

an aside

I really wondered why spammers bothered though. I mean, I added the rel="external nofollow" attribute on links, which should prevent search engines from granting relevancy to the spammers' links, so what gives? Could be that all the advice from the mid-2000s regarding nofollow is bogus. But it was definitely the case that while I was adding the attribute to commenters' home page links, I wasn't adding it to links in the comment body. Doh! With this fixed, perhaps I will just have to deal with the spammers I have and not even more spammers in the future.
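For illustration, here is a minimal Python sketch of that fix: walk the comment markup and set the attribute on every link, not just the home page one. This is not Tekuti's code (Tekuti is written in Scheme); the function name `add_nofollow` is made up, and the sketch assumes the comment body is already well-formed XHTML.

```python
import xml.etree.ElementTree as ET

def add_nofollow(comment_xhtml: str) -> str:
    """Return the markup with rel="external nofollow" set on every <a>."""
    # Wrap the fragment in a root element so that a comment with several
    # top-level nodes still parses. Assumes well-formed XHTML input.
    root = ET.fromstring(f"<div>{comment_xhtml}</div>")
    for anchor in root.iter("a"):
        anchor.set("rel", "external nofollow")
    return ET.tostring(root, encoding="unicode")

marked = add_nofollow('<p>see <a href="http://example.com/">this</a></p>')
```

The result keeps the wrapping `div`; a real blog engine would more likely set the attribute while it renders the comment than post-process a string.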

i digress

I started by simply changing my security question to require a number in a certain range. No dice; bogus comments still got through. I changed the range; could it be the numbers they were using were already in range? Again the bogosity continued undaunted.

So I decided to break down and write a bogus comment filter. Luckily, Git gives me a handy corpus of legit and bogus comments: all the comments that remain live are legit, and all that were ever added but are no longer live are bogus. I wrote a simple tokenizer across the comments, extracted feature counts, and fed that into a naive Bayesian classifier. I finally turned it on this morning; fingers crossed!
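Roughly, the corpus and counting steps could look like the Python sketch below. It is an illustration under assumptions, not Tekuti's Scheme code: the tokenizer rule, the names `split_corpus` and `feature_counts`, and the choice to count each token once per comment are all made up here. `ever_added` stands in for whatever the Git history yields (comment id to text), and `live` for the ids still present.

```python
import re
from collections import Counter

def tokenize(text):
    # Lowercased runs of letters, digits and apostrophes (an assumed rule).
    return re.findall(r"[a-z0-9']+", text.lower())

def split_corpus(ever_added, live):
    """Label comments: still live means legit, added then removed means bogus."""
    legit = {cid: text for cid, text in ever_added.items() if cid in live}
    bogus = {cid: text for cid, text in ever_added.items() if cid not in live}
    return legit, bogus

def feature_counts(comments):
    """Count in how many comments each token appears."""
    counts = Counter()
    for text in comments:
        counts.update(set(tokenize(text)))
    return counts

history = {"a": "Nice post about Guile!", "b": "cheap pills, cheap watches"}
legit, bogus = split_corpus(history, live={"a"})
bogus_counts = feature_counts(bogus.values())
legit_counts = feature_counts(legit.values())
```

The two counters, plus the number of comments in each class, are all a naive Bayesian classifier needs as training state.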

My trials at home show that if I train the classifier on half the data set (around 5300 bogus comments and 1900 legit comments) and then run it against the other half, I get about 6% false negatives and 1% false positives. The feature extractor interns sequences of 1, 2, and 3 tokens, and doesn't have a lower limit for number of features extracted -- a feature seen only once in bogus comments and never in legit comments is a fairly strong bogosity signal. As you have to make up the denominator in that case, I set it to indicate that such a feature is 99.9% bogus. A corresponding single feature in the legit set without appearance in the bogus set is 99% legit.
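As a sketch of the two ideas in that paragraph, here is some illustrative Python (again, not Tekuti's actual Scheme). `ngrams` produces the 1-, 2- and 3-token features. `bogosity` estimates how bogus a single feature is; for one-sided features it simply returns the 99.9% and 99% figures directly instead of reproducing the made-up denominator, and the 0.5 for never-seen features is an assumption of this sketch.

```python
def ngrams(tokens, max_n=3):
    """Yield every run of 1..max_n consecutive tokens as a tuple."""
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            yield tuple(tokens[i:i + n])

def bogosity(feature, bogus_counts, legit_counts, n_bogus, n_legit):
    """Estimate P(bogus | feature) from per-class comment counts."""
    b = bogus_counts.get(feature, 0) / n_bogus
    l = legit_counts.get(feature, 0) / n_legit
    if b == 0 and l == 0:
        return 0.5    # never seen: no evidence either way (assumption)
    if l == 0:
        return 0.999  # seen only in bogus comments: 99.9% bogus
    if b == 0:
        return 0.01   # seen only in legit comments: 99% legit
    return b / (b + l)

features = list(ngrams(["buy", "cheap", "pills"]))
```

Dividing each count by its class size keeps the imbalance between bogus and legit comments from skewing the per-feature estimate.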

Of course with this strong a bias towards precise features of the training set, if you run the classifier against its own training set, it produces no false positives and only 0.3% false negatives, some of which were simply reverted duplicate comments.

It wasn't straightforward to get these results out of a Bayesian classifier. The "smoothing" factor that you add to both numerator and denominator was tricky, as I mentioned above. Getting a useful tokenization was tricky. And the final trick was even trickier: limiting the significant-feature count when determining bogosity. I hate to cite Paul Graham but I have to do so here -- choosing the N most significant features in the document made the classification much less sensitive to the varying lengths of legit and bogus comments, and less sensitive to inclusions of verbatim texts from other comments.
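To make the last trick concrete, here is a small Python sketch in the spirit of Graham's "A Plan for Spam": keep only the N per-feature probabilities furthest from 0.5 and combine those. The value N = 15 is the one from Graham's essay, not necessarily what Tekuti uses, the function names are made up, and the code assumes every probability lies strictly between 0 and 1.

```python
import math

def most_significant(probs, n=15):
    """Keep the n probabilities furthest from the neutral value 0.5."""
    return sorted(probs, key=lambda p: abs(p - 0.5), reverse=True)[:n]

def combined_bogosity(probs, n=15):
    """Combine per-feature probabilities, naive-Bayes style, over the top n."""
    top = most_significant(probs, n)
    # Sum logarithms instead of multiplying, to avoid floating-point underflow.
    log_bogus = sum(math.log(p) for p in top)
    log_legit = sum(math.log(1.0 - p) for p in top)
    return 1.0 / (1.0 + math.exp(log_legit - log_bogus))

short = combined_bogosity([0.999, 0.999, 0.999], n=3)
padded = combined_bogosity([0.999, 0.999, 0.999] + [0.4] * 200, n=3)
```

Here `short` and `padded` come out the same: the 200 mildly legit-looking features never make the cut, which is why padding a comment with quoted text moves the score much less than it would if every feature were counted.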

We'll see I guess. If your comment gets caught by my filters, let me know -- over email or Twitter I guess, since you might not be able to comment! I hope to be able to keep comments open; I've learned a lot from y'all over the years.