Guile-GNOME was the first object-oriented framework that I had ever worked with in Scheme. I came to it with all kinds of bogus ideas, mostly inherited from my C to Python formational trajectory. I'd like to discuss one of those today: the object closure. That is, if an object is code bound up with data, how does the code have access to data?

In C++, object closure is a non-problem. If you have an object, w, and you want to access some data associated with it, you dereference the widget structure to reach the member that you need:

char *str = w->name;

Since the compiler knows the type of w, it knows the exact layout of the memory pointed to by w. The ->name dereference compiles into a memory fetch from a fixed offset from the widget pointer.

In constrast, data access in Python is computationally expensive. A simple expression like w.name must perform the following steps:

look up the class of w (call it W)

loop through all of the classes in W's "method resolution order" --- an ordered set of all of W's superclasses --- to see if the class defines a "descriptor" for this property. In some cases, this descriptor might be called to get the value for name.

find the "dictionary", a hash table, associated with w. If the dictionary contains a value for name, return that.

otherwise if there was a descriptor, call the descriptor to see what to do.

This process is run every time you see a . between two letters in python. OK, so getattr does have an opcode to itself in CPython's VM instruction set, and the above code is implemented mostly in C (see Objects/object.c:PyObject_GenericGetAttr). But that's about as fast as it can possibly get, because the structure of the Python language definition prohibits any implementation of Python from ever having enough information to implement the direct memory access that is possible in C++.

But, you claim, that's just what you get when you program in a dynamic language! What do you want to do, go back to C++?

straw man says nay

"First, do no harm", said a practitioner of another profession. Fundamental data structures should be chosen in such a way that needed optimizations are possible. Constructs such as Python's namespaces-as-dicts actively work against important optimizations, effectively putting an upper bound on how fast code can run.

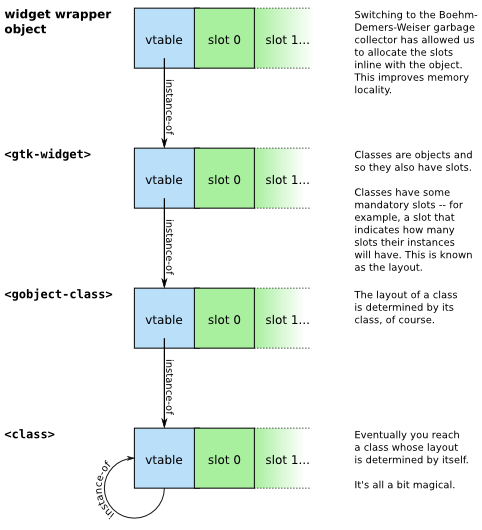

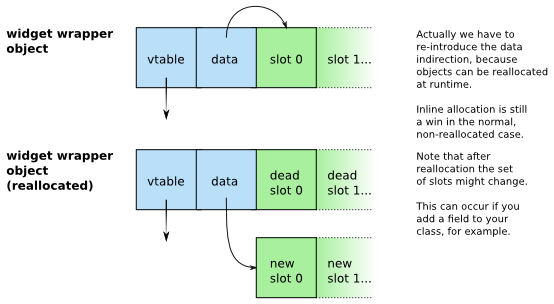

So for example in the case of the object closure, if we are to permit direct memory access, we should allow data to be allocated at a fixed offset into the object's memory area.

Then, the basic language constructs that associate names with values should be provided in such a way that the compiler can determine what the offset is for each data element.

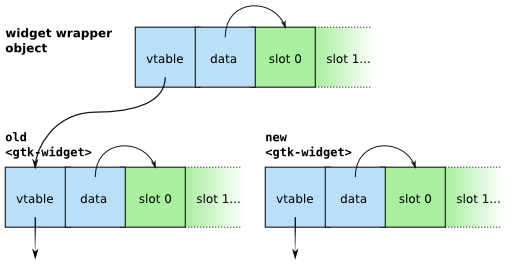

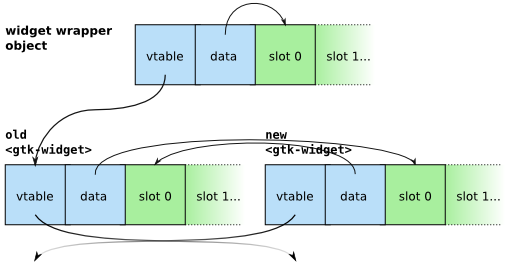

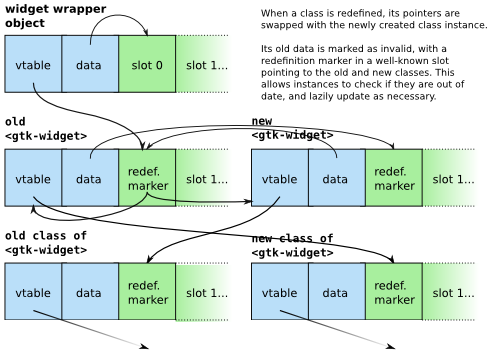

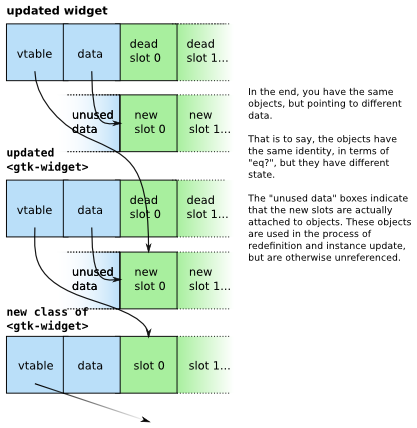

In dynamic languages, types and methods are defined and redefined at runtime. New object layouts come into being, and methods which operated on layouts of one type will see objects of new types as the program evolves. All of this means that to maintain this direct-access characteristic, the compiler must be present at runtime as well.

So, in my silly w.name example, there are two cases: one, in which the getattr method is seeing the combination of the class W and the slot name for the first time, and one in which we have seen this combination already. In the first case, the compiler runs, associating this particular combination of types with a new procedure, newly compiled to perform the direct access corresponding to where the name slot is allocated in instances of type W. Once this association is established, or looked up as in the second case, we jump into the compiled access procedure.

Note that at this point, we haven't specified what the relationship is between layouts and subclassing. We could further specify that subclasses cannot alter the layout of slots defined by superclasses. Or, we could just leave it as it is, which is what Guile does.

Guile, you say? That slow, interpreted Scheme implementation? Well yes, I recently realized (read: was told) that Guile in fact implements this exact algorithm for dispatching its generic functions. Slot access does indeed compile down to direct access, as far as can be done in a semi-interpreted Scheme, anyway. The equivalent of the __mro__ traversal mentioned in the above description of python's getattr, which would be performed by slot-ref, is compiled out in Guile's slot accessor generics.

In fact, as a theoretical aside, since Guile dispatches lazily on the exact types of the arguments given to generic functions (and not just the specializer types declared on the individual methods), it can lazily compile methods knowing exactly what types they are operating on, with all the possiblities for direct access and avoidance of typechecking that that entails. But this optimization has not yet entered the realm of practice.

words on concision

Python did get one thing right, however: objects' code access their data via a single character.

It is generally true that we tend to believe that the expense of a programming construct is proportional to the amount of writer's cramp that it causes us (by "belief" I mean here an unconscious tendency rather than a fervent conviction). Indeed, this is not a bad psychological principle for language designers to keep in mind. We think of addition as cheap partly because we can notate it with a single character: "+". Even if we believe that a construct is expensive, we will often prefer it to a cheaper one if it will cut our writing effort in half.

Guy Steele, Debunking the 'Expensive Procedure Call' Myth, or, Procedure Call Implementations Considered Harmful, or, Lambda: The Ultimate GOTO (p.9)

Since starting with Guile, over 5 years ago now, I've struggled a lot with object-oriented notation. The problem has been to achieve that kind of Python-like concision while maintaining schemeliness. I started with the procedural slot access procedures:

(slot-ref w 'name)

(slot-set! w 'name "newname")

But these procedures are ugly and verbose. Besides that, since they are not implemented as generic functions, they prevent the lazy compilation mentioned above.

GOOPS, Guile's object system, does allow you to define slot accessor generic functions. So when you define the class, you pass the #:accessor keyword inside the slot definition:

(define-class <foo> ()

(bar #:init-keyword #:bar #:accessor bar))

(define x (make <foo> #:bar 3))

(bar x) => 3

(set! (bar x) 4)

Now for me, typographically, this is pretty good. In addition, it's compilable, as mentioned above, and it's mappable: one can (map bar list-of-x), which compares favorably to the Python equivalent, [x.name for x in list_of_x].

My problem with this solution, however, is its interaction with namespaces and modules. Suppose that your module provides the type, <foo>, or, more to the point, <gtk-window>. If <gtk-window> has 54 slots, and you define accessors for all of those slots, you have to export 54 more symbols as part of your module's interface.

This heavy "namespace footprint" is partly psychological, and partly real.

It is "only" psychological inasmuch as methods of generic functions do not "occupy" a whole name; they only specify what happens when a procedure is called with particular types of arguments. Thus, if opacity is an accessor, it doesn't occlude other procedures named opacity, it just specifies what happens when you call (opacity x) for certain types of x. It does conflict with other types of interface exports however (variables, classes, ...), although classes have their own <typographic-convention>. *Global-variables* do as well, and other kinds of exports are not common. So in theory the footprint is small.

On the other hand, there are real impacts to reading code written in this style. You read the code and think, "where does bar come from?" This mental computation is accompanied with machine computation. First, because in a Scheme like Guile that starts from scratch every time it's run, the accessor procedures have to be allocated and initialized every time the program runs. (The alternatives would be an emacs-like dump procedure, or R6RS-like separate module compilation.) Second, because the (drastically) increased number of names in the global namespace slows down name resolution.

lexical accessors

Recently, I came upon a compromise solution that works well for me: the with-accessors macro. For example, to scale the opacity of a window by a ratio, you could do it like this:

(define (scale-opacity w ratio)

(with-accessors (opacity)

(set! (opacity w)

(* (opacity w) ratio))))

This way you have all of the benefits of accessors, with the added benefit that you (and the compiler) can see lexically where the opacity binding comes from.

Well, almost all of the benefits, anyway: for various reasons, for this construct to be implemented with accessors, Guile would need to support subclasses of generic functions, which is does not yet. But the user-level code is correct.

Note that opacity works on instances of any type that has an opacity slot, not just windows.

Also note that the fact that we allow slots to be allocated in the object's memory area does not prohibit other slot allocations. In the case of <gtk-window>, the getters and setters for the opacity slot actually manipulate the opacity GObject property. As you would expect, no memory is allocated for the slot in the Scheme wrapper.

For posterity, here is a defmacro-style definition of with-accessors, for Guile:

(define-macro (with-accessors names . body)

`(let (,@(map (lambda (name)

`(,name ,(make-procedure-with-setter

(lambda (x) (slot-ref x name))

(lambda (x y) (slot-set! x name y)))))

names))

,@body))

final notes

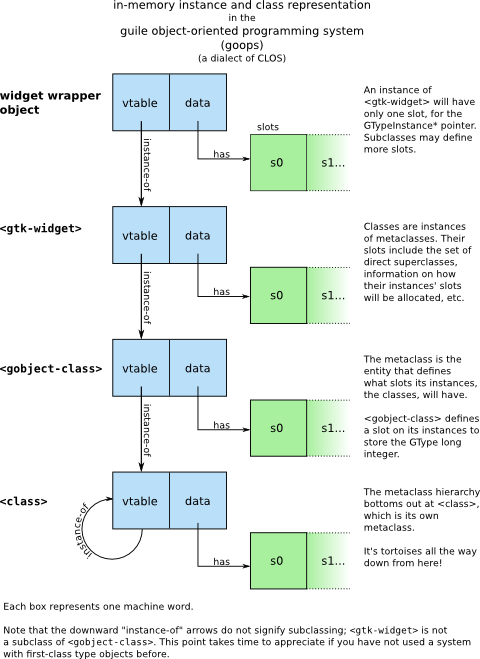

Interacting with a system with a meta-object protocol has been a real eye-opener for me. Especially interesting has been the interplay between the specification, which specifies the affordances of the object system, and the largely unwritten "negative specification", which is the set of optimizations that the specification hopes to preserve. Interested readers may want to check out Gregor Kiczales' work on meta-object protocols, the canonical work being his "The Art of the Metaobject Protocol". All of Kiczales' work is beautiful, except the aspect-oriented programming stuff.

For completeness, I should mention the java-dot notation, which has been adopted by a number of lispy languages targetting the JVM or the CLR. Although I guess it meshes well with the underlying library systems, I find it to be ugly and non-Schemey.

And regarding Python, lest I be accused of ignoring __slots__: the getattr lookup process described is the same, even if your class defines __slots__. The __slots__ case is handled by descriptors in step 2. This is specified in the language definition. If slots were not implemented using descriptors, then you would still have to do the search to see if there were descriptors, although some version of the lazy compilation technique could apply.