My previous installments on November's GNU Hackers Meeting (hither, and thither) touched some topics that were important to me, but not as important as the one I'll mention tonight.

Tonight I want to talk about autonomy and the internet. I'll approach it from a roundabout direction.

the facebook problem

Many of you probably know someone who has had their Facebook account disabled. Here are a couple, and here are some thousands more. While I'm probably not the best person to speak of this, as I don't have a Facebook account, it's quite irritating to have this happen. It's like you've been unpersoned.

Beyond the individual indignation though, what is really important (and sometimes missing) is a more universal indignation: never mind me, what gives a corporation the right to unperson anyone?

Sure, I hear you arguing that it's their services, bla bla, but the end of it is that when you use Facebook, you lose autonomy -- communication and identity are needs just like any other.

I might be going out on a limb here, but consider Article 19 of the fine UN Declaration of Human Rights:

Everyone has the right to freedom of opinion and expression; this right includes freedom to hold opinions without interference and to seek, receive and impart information and ideas through any media and regardless of frontiers.

Emphasis mine, of course. What I'm saying is that you shouldn't depend on the government or a corporation or any other entity outside your actual community to be able to actualize these natural rights.

and further: article 12

Though I don't like the wording of this one as much as the previous article, nor the gendered pronouns, check it:

No one shall be subjected to arbitrary interference with his privacy, family, home or correspondence, nor to attacks upon his honour and reputation. Everyone has the right to the protection of the law against such interference or attacks.

As an American living in Europe, it has taken me some time to appreciate the European focus on privacy. I don't think people in the States understand the issues as well as people do here. OK, so your parents/grandparents lived through fascism: so what?

On a personal level, whether you're an industry insider, someone avoiding an abusive relationship, or an Earth Liberation Front activist, privacy is terribly important. It's not an exaggeration to say that to cede control over your privacy is to cede control over your identity.

But organizations that control your data on this level usually aren't stupid enough to let you know, or make you think about it. All that is left is a dull throb of database scandals and terms-of-service changes and wiretaps.

The problem is not the existence of malicious people: the problem is that your data is out there. All it takes is one nosy person, or one controlling governmental agency (cf. A, B), or one corporation wanting to monetize (cf. all of them).

There are simply no safeguards. There is nothing you can do if you want to be a part of the modern web to protect your privacy. Your data on servers is always available to wiretap, and subpoena if necessary. Your data is not your own.

origins

Both of these problems (unpersonage and privacy violations) stem from the fact that you rely on someone else's computer to fulfill your personal needs. RMS wrote about this in an article entitled Who does that server really serve?, and I agree with all of his points.

Stormy Peters' recent article, 10 free apps I wish were open source, illustrates many of these misunderstandings. Besides the misleading terminology, what if Gmail were free software under the AGPL? Would that protect users against the recent Buzz fiasco? No, because users are not in control of the software they use. Users should be able to modify the software they run; if they cannot, because that software runs on another machine, they should not run software on another machine.

I don't think Richard's article goes far enough. As I mentioned above, the problem is that your data is just "out there". Let's postulate an AGPL Gmail that also allows me to run my own Gmail software on Google's network. While this would meet the Free Software definition, it still harms me as a user, because anyone who has access to that server has access to my data.

Besides that, there is the practical difficulty, in that Facebook or Google would never allow you access to the programs that run on your data in that way.

What I'm building up to is the idea that the client-server paradigm is fundamentally incompatible with autonomy. Growing your own food is better than sharecropping, better than "web 2.0".

what shall we do, sir wingo

A fundamental problem requires a radical (adj.: to the root) solution. As is often the case, the seed of a solution has been with us for a long time: public-key cryptography.

Geeks have long enjoyed mailing each other signed and/or encrypted mails, relying on webs of trust to ensure the identity of the sender. Asymmetric cryptography is what makes this work: it allows you to send and receive private messages over insecure channels, like the internet.
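To make that concrete, here is a minimal sketch in Python of the two operations involved: encrypting to someone's public key, and signing with your own private key. It is textbook RSA on two small, well-known primes, which is an assumption chosen for brevity and is in no way secure; it is not how GPG works internally, and the names (make_keypair, encrypt, sign and so on) are made up for the illustration.

```python
# Toy textbook RSA: for illustration only, NOT secure.
# Real mail would go through GPG or a vetted cryptography library.
import hashlib

def make_keypair(p, q, e=65537):
    """Build a (public, private) key pair from two primes p and q."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)  # modular inverse of e; needs Python 3.8+
    return (n, e), (n, d)

def encrypt(public, number):
    """Anyone holding the public key can do this."""
    n, e = public
    return pow(number, e, n)

def decrypt(private, ciphertext):
    """Only the holder of the private key can undo it."""
    n, d = private
    return pow(ciphertext, d, n)

def digest(message, n):
    """Hash a byte string down to an integer smaller than the modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % n

def sign(private, message):
    """Signing uses the private key, so it proves who wrote the message."""
    n, d = private
    return pow(digest(message, n), d, n)

def verify(public, message, signature):
    """Checking a signature needs only the public key."""
    n, e = public
    return pow(signature, e, n) == digest(message, n)

# Hypothetical usage: Leif publishes leif_public and keeps leif_private.
leif_public, leif_private = make_keypair(2**31 - 1, 2**61 - 1)
note = int.from_bytes(b"hi Leif", "big")      # must be smaller than n
sealed = encrypt(leif_public, note)           # safe to send over the wire
opened = decrypt(leif_private, sealed)        # only possible on Leif's machine
signature = sign(leif_private, b"see you at GHM")
```

The point to notice is which half of the key pair each function takes: everything that reads private data or asserts identity needs the private half.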

I won't belabor the point, as most of my readers have seen GPG; it is the Right Thing. But what would GPG-style interactions mean in the context of Facebook?

All of you are probably cringing at this point, imagining the complexity and security implications. But let's bask in that moment for a while, shall we: if it were the case that fellow facebooklicans sent you private messages via GPG, being able to view them sensibly over the web would imply that the Facebook server would have your private key.

Extrapolating further, the very set of your "friends" is a kind of private data. If this data were properly encrypted and signed with your key pair, presenting the standard facebook view that most people know would again require your private key.

In the end, you can't have web services that access private data. Not if you want privacy, anyway.

an autonomous facebook?

To preserve the privacy of your identity, you should never send your private key over the wire. This is well-known. But if you are to do computation on your social network, as facebook.com does, then it follows that such computation must be done local to the user's machine.

But all of facebook on your local machine? Surely you're joking, Mr. Wingo! Well, yes and no. Obviously the answer is not "let's everyone download a program from facebook and run it locally with your private key as an argument". Not quite, anyway.

One good part of the so-called "web 2.0" is that I can code foo-anarchist-commune.org's web site in Scheme and no one is any the wiser. It's easy to deploy in today's environment; deploying e.g. a new facebook experience should not cause me to have to click something to install a new binary.

So, the constraints are:

My key pair is my identity. My public key may be distributed, but my private key must be private.

Since computation needs my private key, computation must happen locally. Viewing an "autonomous facebook" implies running a program on my local machine, with access to my private key.

Since an "autonomous facebook" would be useless without other people, I need access to other people's information, so I need a network too.

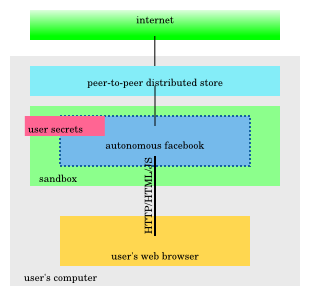

We can already draw a picture of what this looks like. Let's assume that the end-user experience is still via the web browser.

I think that my paranoid readers know where I'm going with this. My technically-minded readers will be flabbergasted, perhaps, at the enormity of the problem of implementing facebook under such constraints. How does my facebook know that it's participating in a network? How does it know about my friend Leif? How does it get updates? Where is the database?

autonomous data model

Well, one thing is clear: someone needs to hold all of that data. Who should do it? In the case of my data (my photos, my messages to others, etc), I should be the one, as it makes me more autonomous. Everyone needs to seed their own data on the network.

I might choose to seed my data from multiple locations, for reliability. Beyond that, nodes might cache information that is routed through them.

One way to implement such a distributed store would be git-like, with content-based addressing and consistent hashing; or like bittorrent. It would have efficiency advantages. I thought for a while that this would be the solution, but GHM folk brought up the privacy argument, that your pattern of network access is too revealing.
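For the curious, the git-like approach can be sketched in a few lines of Python. This is only an illustration of content-based addressing plus consistent hashing, not a design anyone has settled on, and it does nothing about the access-pattern problem just mentioned. The class names, the node names and the choice of SHA-256 with two replicas are all assumptions.

```python
import bisect
import hashlib

def address(blob: bytes) -> str:
    """Content-based address: the SHA-256 hex digest of the data itself."""
    return hashlib.sha256(blob).hexdigest()

class HashRing:
    """Consistent hashing: map a content address to the nodes that seed it."""

    def __init__(self, nodes, vnodes=64):
        # Each node gets several points on the ring to even out the load.
        self._ring = sorted(
            (int(address(f"{node}#{i}".encode()), 16), node)
            for node in nodes
            for i in range(vnodes)
        )
        self._points = [point for point, _ in self._ring]

    def nodes_for(self, key: str, replicas=2):
        """Walk clockwise from the key's position, collecting distinct nodes."""
        start = bisect.bisect(self._points, int(key, 16))
        found = []
        for offset in range(len(self._ring)):
            node = self._ring[(start + offset) % len(self._ring)][1]
            if node not in found:
                found.append(node)
            if len(found) == replicas:
                break
        return found

class Store:
    """In-memory stand-in for a set of machines seeding data."""

    def __init__(self, nodes):
        self.ring = HashRing(nodes)
        self.disks = {node: {} for node in nodes}

    def put(self, blob: bytes) -> str:
        key = address(blob)
        for node in self.ring.nodes_for(key):
            self.disks[node][key] = blob
        return key

    def get(self, key: str) -> bytes:
        for node in self.ring.nodes_for(key):
            blob = self.disks[node].get(key)
            # A blob can be checked against its own address,
            # so a cache or replica does not have to be trusted.
            if blob is not None and address(blob) == key:
                return blob
        raise KeyError(key)

# Hypothetical usage: three machines, each blob seeded on two of them.
store = Store(["home-server", "laptop", "friend-cache"])
photo_key = store.put(b"holiday photo bytes")
```

Because the address is derived from the content, any node that hands back the wrong bytes is caught on the spot, which is what makes caching by untrusted intermediaries plausible.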

So my current thought is to use GNUnet somehow. I'm not sure how this will go, but it's worth a try.

new operating system

Currently, to deploy a web application, you have to pay for servers and bandwidth, and this eventually causes your interests to diverge further from those of your users. With an autonomous cloud, you could instead deploy web applications using the compute power and bandwidth of leaf nodes -- the power of the people using your software.

This would drastically lower the hacktivation energy for a new project. The little green sandbox above starts to approach a new kind of operating system, even -- a new program to run your programs.

Obviously, I'm thinking Guile would be a fine runtime for the sandbox, to run programs written for Guile -- in Ecmascript or Lua or Scheme or Elisp or whatever other languages people implement for Guile. The user would receive the source code, and running it would automatically compile it on their machine. The application source would also be available to modify and redistribute.
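The "receive source, compile locally, keep it modifiable" flow might look roughly like the following. Python stands in for Guile here purely for the sake of a short runnable sketch, and the App class is hypothetical. Note loudly that exec with a fresh namespace is not a sandbox: it offers no protection against hostile code, which a real runtime for mobile code would have to provide.

```python
class App:
    """An application shipped as source and compiled on the user's machine."""

    def __init__(self, source: str):
        # The source stays around so the user can read, modify
        # and redistribute it.
        self.source = source
        # Compilation happens locally, when the source arrives.
        self._code = compile(source, "<autonomous-app>", "exec")

    def run(self, **inputs):
        # WARNING: a plain dict namespace is NOT a security boundary.
        namespace = dict(inputs)
        exec(self._code, namespace)
        return namespace

    def modified(self, old: str, new: str) -> "App":
        """Return a patched copy, recompiled from the edited source."""
        return App(self.source.replace(old, new))

# Hypothetical usage: run what was received, then run a local modification.
received = App("greeting = 'hello, ' + name")
result = received.run(name="Leif")
patched = received.modified("hello", "hola").run(name="Leif")
```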

Having a sandbox for mobile code also raises the possibility of interesting mapreduce-type operations, to index the distributed data store.
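As a rough idea of what such an operation could be, here is a sketch in Python of building a word index over data held by several nodes. The map step is meant to run wherever the data lives and the reduce step merges what comes back; the node names, documents and function names are invented for the example, and a real version would have to respect who is allowed to read what.

```python
from collections import defaultdict

def map_words(node_name, documents):
    """Map step, run on the node holding the data: emit (word, location)."""
    for doc_id, text in documents.items():
        for word in set(text.lower().split()):
            yield word, (node_name, doc_id)

def reduce_index(pairs):
    """Reduce step: merge the emitted pairs into word -> set of locations."""
    index = defaultdict(set)
    for word, location in pairs:
        index[word].add(location)
    return index

# Hypothetical data, seeded by two different people.
nodes = {
    "andy": {"post-1": "autonomy and the internet"},
    "leif": {"note-7": "Autonomy matters"},
}

pairs = (
    pair
    for node_name, documents in nodes.items()
    for pair in map_words(node_name, documents)
)
index = reduce_index(pairs)
```

Only the small (word, location) pairs travel over the network; the documents themselves stay where they are seeded.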

Firefox could be modified with a plugin to add a new addressing mode, which would go through the HTTP server running locally to your machine. You would be browsing the "autonomous web".
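The local HTTP server half of that is easy to picture. Below is a minimal sketch using Python's standard http.server, bound to the loopback address so that only the browser on the same machine can reach it. The LocalNode handler and the page it returns are placeholders: a real node would look up the requested path in the distributed store and render it with the user's private key at hand.

```python
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

class LocalNode(BaseHTTPRequestHandler):
    """Answers the local browser; nothing here is reachable from outside."""

    def do_GET(self):
        # Placeholder body: a real node would fetch and decrypt data here.
        body = f"autonomous page for {self.path}\n".encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the sketch quiet

def make_server(port=0):
    """Create a server on 127.0.0.1; port 0 lets the OS pick a free port."""
    return ThreadingHTTPServer(("127.0.0.1", port), LocalNode)
```

Calling make_server(8042).serve_forever() (the port number is arbitrary) and pointing a browser at http://127.0.0.1:8042/leif/photos would show the placeholder page; the browser plugin would then only need to rewrite "autonomous" addresses into requests to this server.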

Of course, since the whole thing is based on protocols, one might substitute something else for the Guile environment; or write an alternate interface to Facebook that works over a console or presents you with a native (e.g., Clutter) interface.

related work

There have been loads of people thinking these ideas; none of them is new.

My ongoing use of the term "autonomous" is a nod to anarchists, and to the autonomo.us group. Autonomo.us would be a great organizing place for work around this, but their list server is a bit moribund; somewhat ironic. Perhaps we can return life to that group, though.

GNU Social is a project to make a free-as-in-freedom social network. I think it's a great initiative, and it's probably the place to go if you want to build an alternative to Facebook right now.

GNU Social has made the decision to just get something working. This is the right thing to do, IMO; but near-term solutions should not prevent concurrent research for the long-term. In the end if making an autonomous cloud turns out to be possible, perhaps we can rebase GNU Social on top of the autonomous infrastructure.

Is there something else I should really be looking at? Let me know! I don't think one can ever do a full survey of this field -- better to just start hacking -- but I'm interested in good ideas, especially on the data storage and access problem.

plan

All of this is a bit pie-in-the-sky, but I am going to see if I can work up a proof-of-concept for the upcoming GHM in July. If you are interested in helping this project, probably the best thing to do is to code up some little demo application using GNUnet or some other store. Once you have that, drop by to see me in #guile and we'll talk.

Comments welcome!